State → Action → Reward

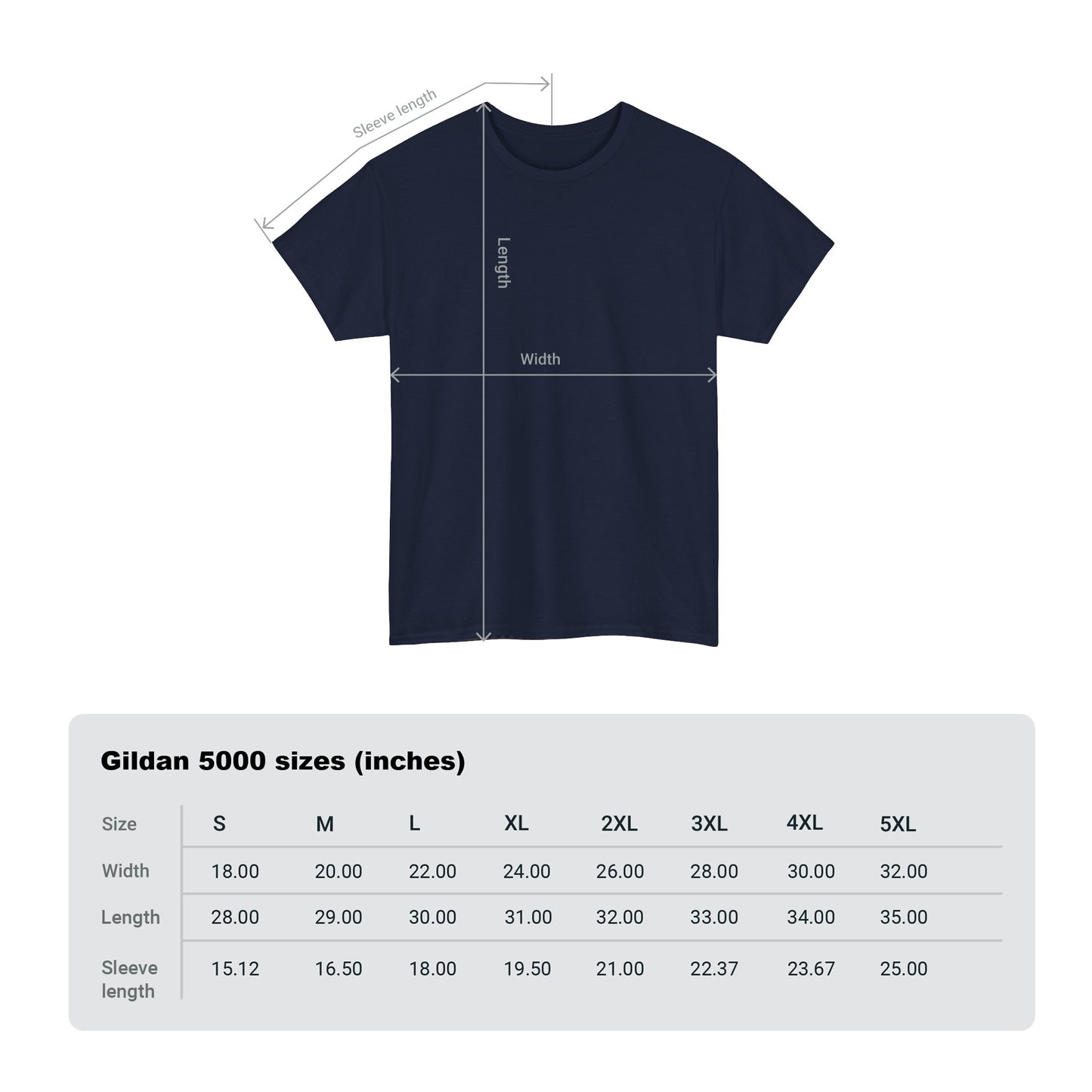

Features the iconic AlphaGo game board with move numbers, rendered in a three-color scheme that emphasizes the state-action-reward cycle. The design includes the subtle fine print "*reward hacking since 1957".

AI Context: Illustrates core concepts of reinforcement learning through the lens of Go gameplay. References both early RL concepts from the 1950s and modern achievements like AlphaGo, highlighting the continuous evolution of reward-based learning.

Art Inspiration: Based on the historic Lee Sedol vs AlphaGo match, particularly the famous move 37 of game 2. The design combines traditional Go board aesthetics with modern data visualization principles, creating a bridge between a classical board game and contemporary AI.

Fun Fact: The "since 1957" reference points to Arthur Samuel's pioneering work on a checkers program that improved through its own play, one of the first examples of a self-learning program. In 1962 Samuel's program beat Robert Nealey, a self-described checkers master from Connecticut, foreshadowing AlphaGo's victory by more than five decades.